Rise of the machines.

It’s true! It’s really happened: Sam Illingworth (our tutor for non-SciArt peoples) has been taken by the machines! Well, a machine. Well, a small, red, probably toy, robot... called Rob. But the point is, it has him, and he’s done for unless we give in to its demands. What? We don’t negotiate with… Look, I think that’s missing the point: we have to communicate an emotion to the Robot! In groups.

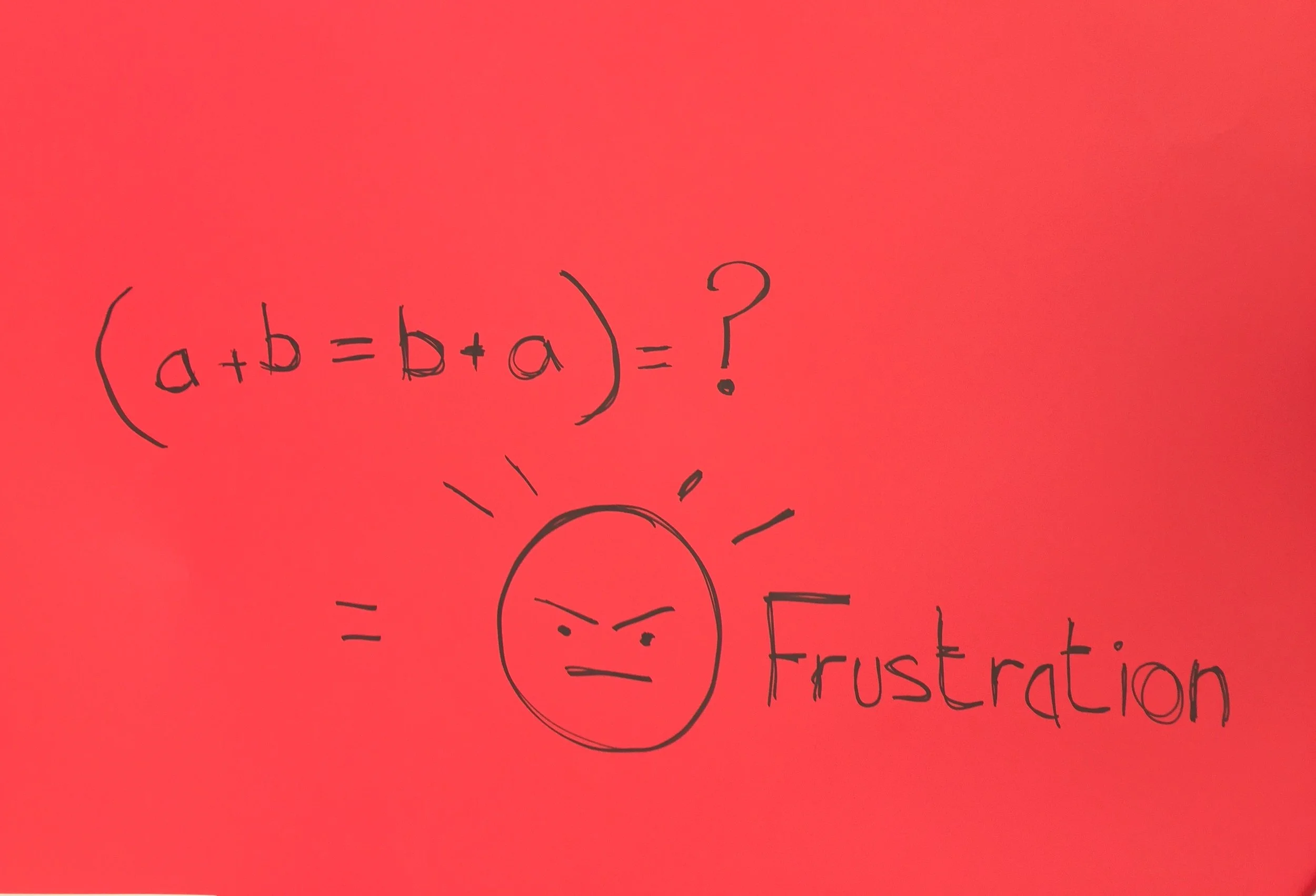

And here’s the rub. Communicating emotions in general can open up a whole can of worms, yet we normally get across what we mean through a whole series of connections and links that build on prior knowledge: “It’s a bit like…”, “you know when…”, or more straightforwardly “sort of...”. We live on the edge of a metaphorical cliff, and can often fall off when we mix our drinks. Emotional states are often represented abstractly or symbolically, using colour and perspective to indicate feelings or moods that may be familiar to us. But to a robot? Especially as this task requires us to communicate just one emotion, not a range of emotions, which reduces the options for associative or empirical demonstration (“Anger’s a bit like frustration, only…” etc).

Reluctant Heroes.

As a group we spiralled down the rabbit hole. Whether we took the blue or the red pill is fuzzy (though surely that suggests we took the blue one, right?). We considered the use of colours: how do those pills create an emotional context for the choice? After all, if we choose the red and grasp hold of reality, are we associating red with an abrupt experience, with a passion for the truth, with lust and love, or even with a romantic view of life? And as for the blue: coldness, reason and dispassion, or a numbed, hallucinatory state?

We discussed trying to generate a response from the Robot, considering the option of asking a question, or questions, to provoke an emotional response: our own Voight-Kampff test, if you like…

fig 1: Neo eye view. Lana & Lilly Wachowski (as the Wachowski Brothers), 1999: The Matrix. Warner Bros.

Scott, Ridley (dir.), 1982: Blade Runner. Warner Bros.

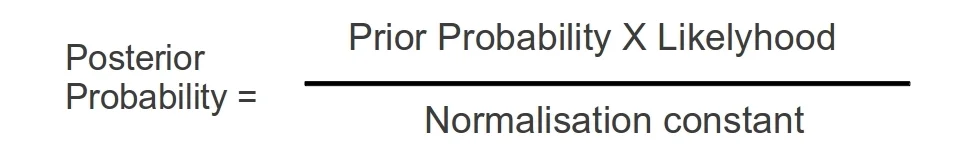

Then we tried to get technical, researching the use of Bayesian code in the creation of learning programmes. This is a form of code based on Bayesian probability, used in developing artificial intelligence, specifically in giving a program the capability to learn. That is to say:

fig 2: Bayes' rule, simplified. Bayesian Adventures, bayesianadventures.wordpress.com, https://bayesianadventures.wordpress.com/2014/03/30/bayes-theorem-and-the-monty-hall-problem/ (1/2/17)

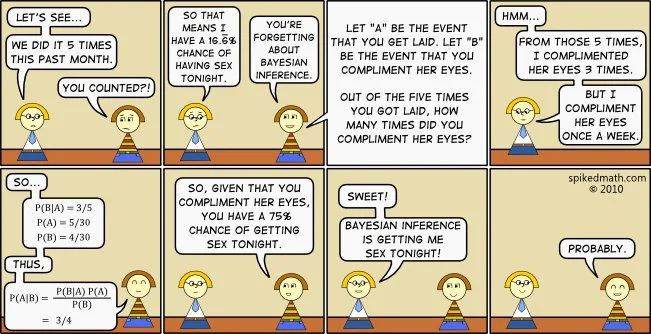

The theorem is used to estimate the probability of an event in the light of events that have already taken place, so that beliefs are revised as new evidence comes in. Or to put it another way:

fig 3: Bayesian probability, even more simplified. Cartoon (Bayes and sex) from Spiked Math Comics, spikedmath.com, http://spikedmath.com/226.html (1/2/17)
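As a minimal sketch of what fig 2 describes (my own illustration, not anything we built on the day), the Python below applies Bayes' rule, P(H | E) = P(E | H) P(H) / P(E), to the Monty Hall problem discussed on the Bayesian Adventures page. The function name `bayes_update` and the door set-up are hypothetical, chosen for illustration. In principle, a 'learning' programme would feed each posterior back in as the prior for the next piece of evidence.

```python
from fractions import Fraction


def bayes_update(prior, likelihood):
    """Return the posterior P(H | E) for each hypothesis H (hypothetical helper).

    prior:      dict mapping hypothesis -> P(H)
    likelihood: dict mapping hypothesis -> P(E | H) for the observed evidence E
    """
    # P(E) = sum over H of P(E | H) * P(H)  (law of total probability)
    evidence = sum(prior[h] * likelihood[h] for h in prior)
    # Bayes' rule: P(H | E) = P(E | H) * P(H) / P(E)
    return {h: prior[h] * likelihood[h] / evidence for h in prior}


# Monty Hall: the car is equally likely to be behind any of the three doors.
prior = {door: Fraction(1, 3) for door in (1, 2, 3)}

# We pick door 1; the host (who knows where the car is) opens door 3 to show a goat.
# Probability that the host opens door 3, given where the car really is:
likelihood = {
    1: Fraction(1, 2),  # car behind our door: host opens door 2 or 3 at random
    2: Fraction(1),     # car behind door 2: host must open door 3
    3: Fraction(0),     # host never reveals the car
}

posterior = bayes_update(prior, likelihood)
print(posterior)  # {1: Fraction(1, 3), 2: Fraction(2, 3), 3: Fraction(0, 1)}
```

The evidence (which door the host opens) shifts belief in door 2 from 1/3 to 2/3, which is why switching pays.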

Our thinking was to adapt this 'learning capability' in order to pre-programme the robot with the means to develop an emotional understanding.

We found the first options difficult: they relied on the vagaries of association embedded in the relationship between colours and meaning, and on the experiential disconnect between generating a feeling and understanding the experience. As for the coding, the time available left us little chance to synthesise the information, at least in a way that would let us demonstrate a solution to the task (I found these images later on). In general the task did manage to generate emotions in us: under pressure of time and of needing to achieve something, we found ourselves becoming more and more frustrated.

A Theory of Everything?

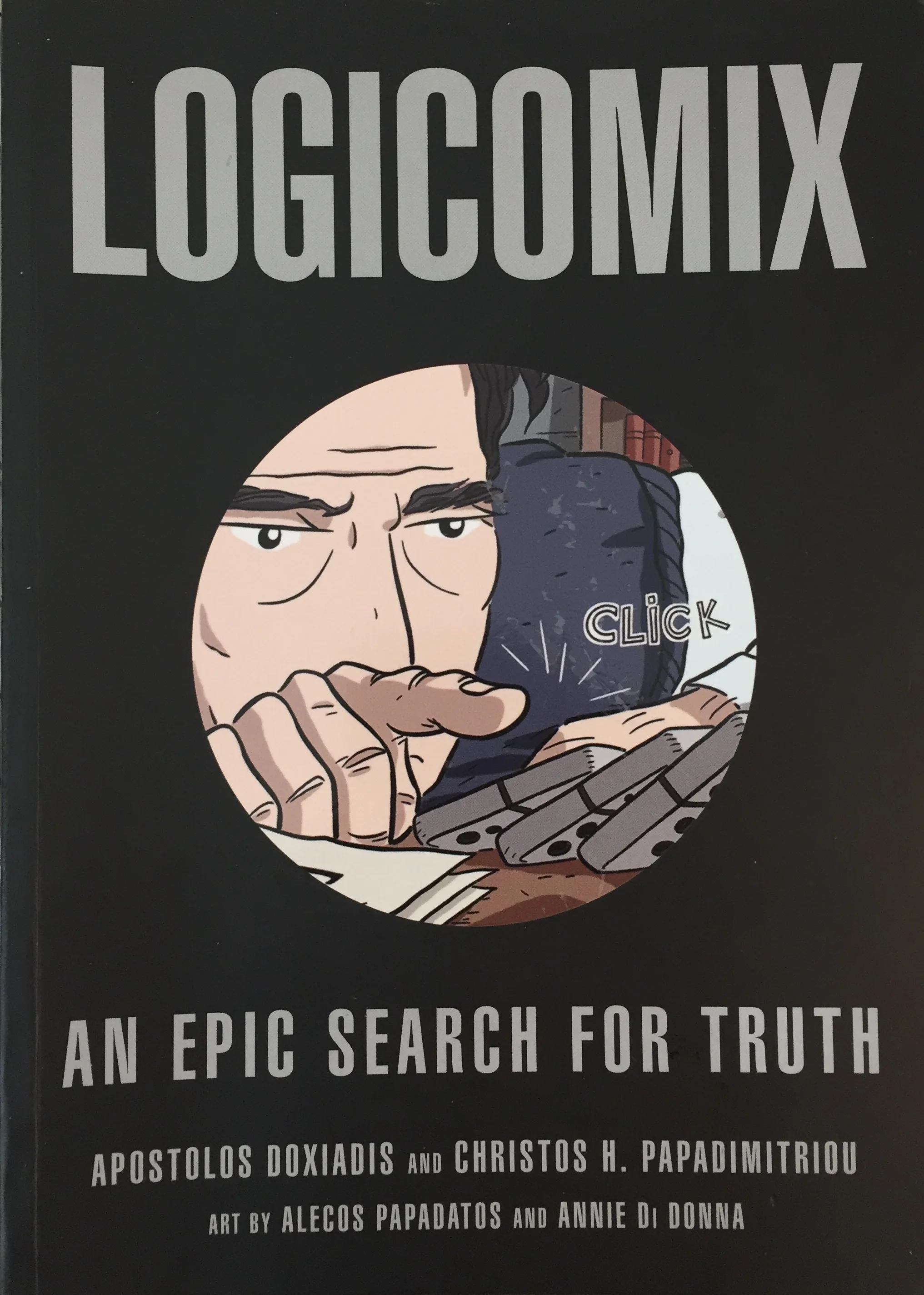

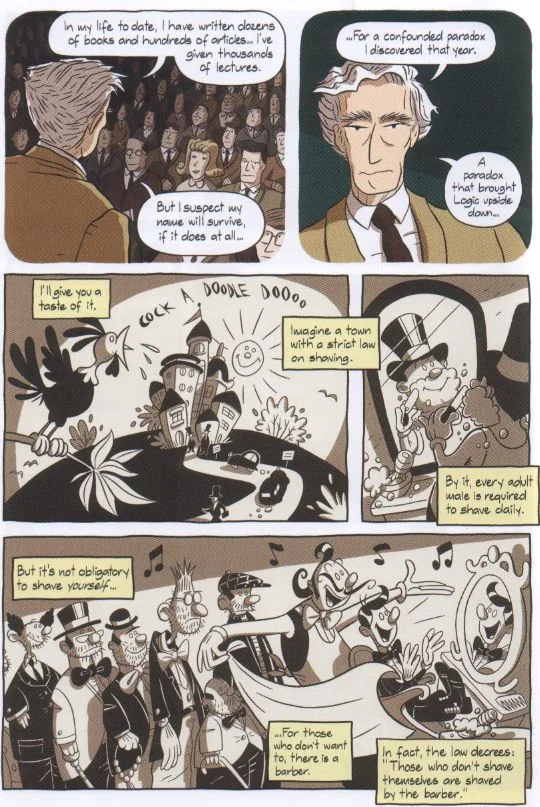

fig 4: Doxiadis, A. & Papadimitriou, C.; art: Papadatos, A. & Di Donna, A. 2009: Logicomix. London, Bloomsbury. Cover.

My response was to connect back to the graphic novel Logicomix, which tells the “tragic” story of Bertrand Russell’s attempt (and failure) to establish the logical foundations of mathematics. Oh, and there’s no sarcasm in those quotation marks: the search for truth, and the subsequent failure and despondency, are framed as Greek tragedy. The idea is that mathematics is built on key guiding principles that allow equations, formulae, algorithms and so on to describe and explain the universe around us; principles that cannot themselves be logically demonstrated, and which we call “axioms”. Very nice, but where is this going?

To me this sense of tragedy, this disconnect in logical process, was something that a Robot could relate to. The now clichéd “does not compute” from Lost in Space is a cry of anguish, a shriek of frustration from the ordered mind in a moment of cognitive dissonance with an imperfect universe. We then wove this into our suggestion.

The Rescue.

Collaboratively, then, we opted for a three-part strategy:

- Upload an AI code

- Use red paper to reinforce the meaning

- Show the following equation (fig. 5)

fig 5: Illogical axiom = symbol = emoji/sign (group design/solution: Damyon Garrity, Rebecca Bennett, Karen Lawrenson & Tony Pickering)

The idea behind this was to present a formula that is (according to Wikipedia) an illogical axiom, and to suggest that the intellectual itch it sets up in reasoned thought is akin to the emotional feeling of frustration.

Other rescue attempts

Other groups explored the problem of communicating a single emotion: through autonomous drawing; through the disconnect between the appearance and the feeling of emotions, by making the robot make a random choice in order to experience feeling; and through voice recognition and computer understanding in an online dating profile. These projects echoed our own difficulty in communicating a single emotion clearly, and the need for a developmental context in which to place emotions (what we might think of as the teenage years, I guess).

Um…

So we come back to the problem of experiential learning and learning by association, though our solution did hope to convey the 'sensation' using language that does not depend on prior emotional understanding. Additionally, the results rest on many assumptions about how a mind would respond to such a logical quest, assumptions that may only be tested by considering and investigating human physiology and the fluctuating chemistry of the brain. One question that pops up, then, is the potential for a new system or classification of robotic ‘emotional’ states. After all, why do we assume that 'emotion' is a constant term? Why should it be the same in different forms of consciousness? And if it need not be, do we consider the canons of art, music, story, myth, literature and gossip as a taxonomy of emotions that we, as humans, can access? A kind of hormonal and emotional training manual (or set of stabilisers), if you like? Will new intelligences need a similar body of work to investigate a new sense of being, or of mind?

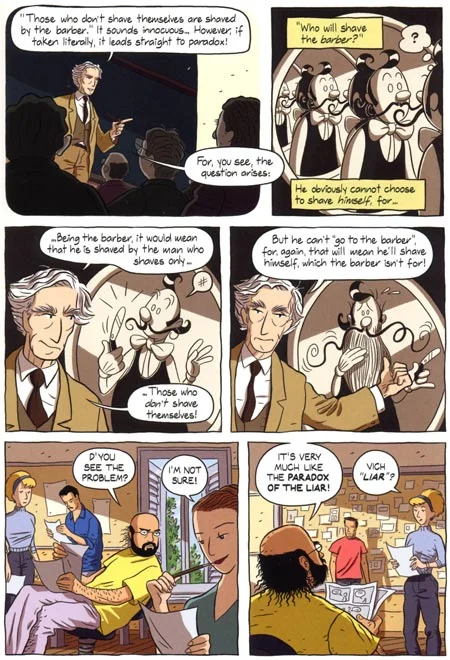

fig 6: Russell's Paradox p.164: Logicomix.

fig 7: Russell's Paradox p.165: Logicomix.

Meanwhile my mind drifts to the aesthetics of 'the equation'. The design and construction of formulae and equations involve notions of balance and of symbols. A common pattern in equations is to substitute one sign (that is, a signifier and a signified) with another that signifies a set of possible values, of which the value used in any particular answer is but one: thus 'where X = ?'. In this sense the equation is constantly in need of reassertion, of clarification.

We drift here into dangerous territory: the mathematical notion of sets, again harking back to Logicomix and to Russell's paradox, which brought down the house of logic in which he dwelt (see figs 6 & 7). So on the one hand we have the semiotic gymnastics of meaning (an overlap with critical literary and cultural theory that I may explore in a future blog), and on the other we have pattern and layout: the hieroglyphs and handwriting of mathematical and theoretical proof. The workings of the mind in two dimensions, straining to burst into three, or three thousand and three. Interchanging symbols for symbols, using "?" and 😊, was our way of trying to 'flesh out' an understanding of emotion, and perhaps indicates our need as individuals for continual contextual reference and reinforcement. More than this, however, there is scope to use this understanding of semiology to explore meaning, whether as pastiche or parody (a Banksyisation of Einstein: E=MC🔨, perhaps?), or perhaps to use the transience of the whiteboard or chalkboard to explore the writing and rewriting of the world in performance art.
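For anyone who hasn't met Russell's paradox (figs 6 & 7), here is the standard statement in set notation, offered as a short gloss rather than anything from the workshop: let R be the set of all sets that are not members of themselves, then ask whether R belongs to R.

$$
R = \{\, x \mid x \notin x \,\}
\quad\Longrightarrow\quad
R \in R \iff R \notin R
$$

Either answer forces its opposite, so the naive idea that any property can define a set breaks down: a "does not compute" moment in its purest form.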

Aftermath: Synthetic emotions

After the final presentation Rob the Robot informed us that we had all failed. We were then told to consider how this made us feel, and to post our response on the group Facebook page (which I can't link to). Though as a group we were disappointed, we started to consider to what extent this feeling was genuine. That is to say, the sentiment was professional, an 'ego' reaction in relation to 'the super-ego', but the knowledge that Sam was in reality safe meant that our responses were in many ways 'synthetic emotions'. Other responses voiced anger, apathy, and even the desire to see the rescue through to the end and find the missing Sam. These were juxtaposed with feelings of ambivalence predicated on the 'non-reality' of the situation.

ZHANG, S: Visual Expressivity Analysis and Synthesis for a Chinese Expressive Talking Avatar (PhD proposal). Beijing, Department of Computer Science & Technology, Tsinghua University. http://media.cs.tsinghua.edu.cn/~szhang/EN/Research/Research%20Proposal.htm (1/2/17)

Synthetic emotion was an idea that linked intriguingly back to the workshop, for there we had been trying to generate emotional reactions that we could not directly connect with, and to communicate them to the Robot. I wanted a visual image of this concept and quickly found fig. 8. The challenges here are authenticity and consciousness, for what I see is an avatar: a computer-generated representation of human emotion. Though the facial movements have been programmed to be recognisable, they do not come across as 'real'; instead they mimic the human, rather as my phone camera thinks portrait paintings are real people and zooms in on the face. Stanislavski's acting theory also comes to mind [Stanislavski, K. 1936/1988: An Actor Prepares. London, Methuen Drama]: his techniques of "emotional memory" and the "magic if" are, after all, ways to recreate 'real' emotional responses in an 'unreal' situation. Can we therefore see a Robot, or a computer, as an 'actor' of the human? Or should we say that they are distinct? Use of the word 'real' is of course problematic, especially if we can develop computer (or Robot; and immediately I'm aware that these terms are not synonymous) consciousness, in which case these 'synthetic emotions' become valid, but other reactions...

Oh... Sam's fine, by the way... I mean, he's alive, anyway. *Coughs: Stockholm, cough*.